Welcome to RouteScout - a moving collection involving media-centric bits and pieces for Spatial Ground Imagery and Corridor Patrol interests

Friday, October 25, 2013

Monday, October 21, 2013

3D Model or Stereo

3D Models - get ready stereoscope

"The TigerCub with Zephyr laser represents an extension of ASC's industry-proven family of 3D Flash LIDAR cameras. It breaks the lightweight barrier (<3lbs) [...]"

Read more about Advanced Scientific Concepts, Inc. (ASC) Introduces the TigerCub 3D Flash LIDAR Camera with Zephyr Laser - BWWGeeksWorld by www.broadwayworld.com

Lidar

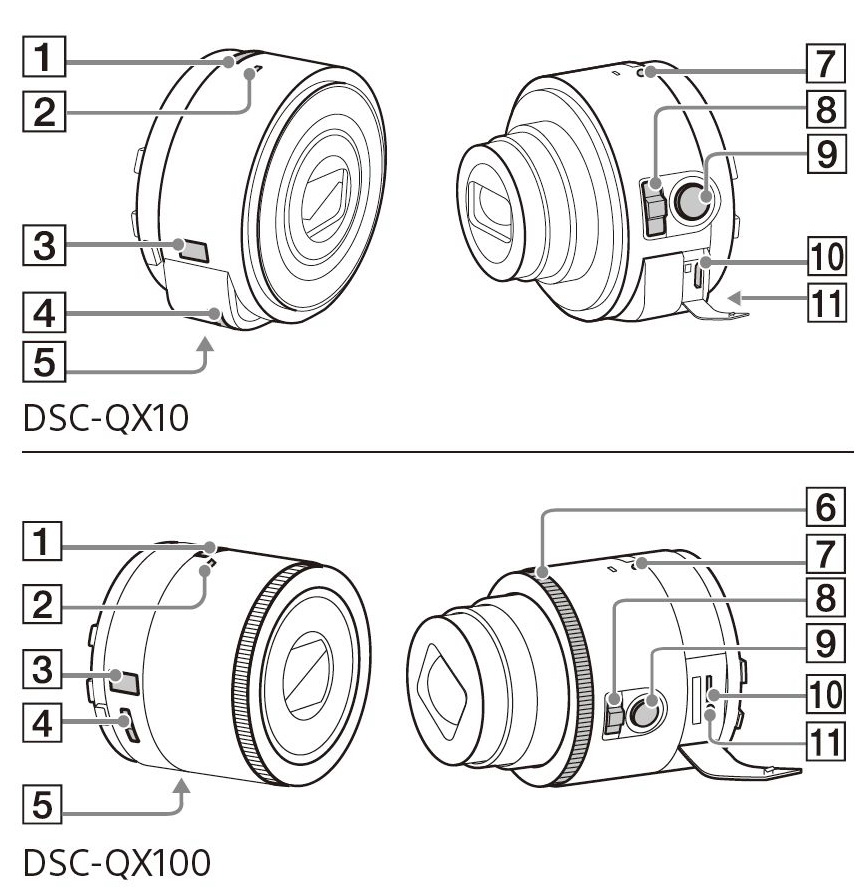

SONY Lens G

Sony Lens G Accessory Manual Leaks, Shows Off Hardware and Functionality

Droid Life: A Droid Community Blog by Tim-o-tato Yesterday

This week, leaked images of an upcoming Sony smartphone accessory, nicknamed Lens G, hit the web. According to a newly leaked user manual for the lenses, there are two models, the QX10 and QX100. The QX100 will be the more expensive of the two, featuring the same sensor that is currently found in Sony’s RX100MII, which can be found on Amazon for about $750. As for the QX10, it will feature an 18-megapixel CMOS sensor that is found in Sony’s WX150.

Each lens will feature its very own power button, microphone, display panel, belt hook, tripod, zoom lever, shutter button, reset button and multifunctional jack. For such a little accessory, it looks like Sony is sparing no expense in making sure users will be able to take great shots with these while they are attached to a phone.

As of right now, we have our money on Sony announcing these lenses at IFA next month in Berlin. Until then, we have no details on a release date or pricing, but we are definitely excited to check them out.

Photosphere Bug

Every version of Android has launched with at least one headlining feature. As any true fan would know, the 4.2 camera brought with it a very cool new mode called Photospheres. While the initial hype has dropped off, the popularity of photospheres still continues to grow, thanks in part to improvements in image quality and the addition of a Maps-based community designated for sharing the immersive images. We don't always want a location attached to our regular pictures, but it's pretty rare when we don't want our photospheres to be geotagged. After all, they are usually taken in public, wide-open spaces. Unfortunately, since updating to Android 4.3, quite a few people have found their photospheres lack geotagged coordinates.

As it turns out, there is an oversight in the latest Camera app that results in a failure to attach coordinates to the photosphere metadata as long as the device is set to a language other than English. As reported in the Issue Tracker, a number of languages cause the lack of data, but changing the phone's system language to English ensures that it works properly. Furthermore, switching back to any other language returns it to the buggy state. It's worth acknowledging that English may not be the only language to not suffer this bug, but nobody has come forward with another.

Workarounds for the issue are a bit sparse, but they are at least simple. The first and most obvious is to switch to English under Settings -> Language & input -> Language, but anybody affected by this bug probably prefers their native tongue, so it isn't really ideal for most people. As an alternative, it's quite simple to use third-party tools to mark up your images with coordinates. Apps like GeoTag and services like Google's own Photosphere generator make it simple to attach location data to the image after it has been generated.
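For anyone curious what "attaching coordinates" involves under the hood, here is a minimal sketch. EXIF GPS fields store latitude and longitude as degree/minute/second rationals plus a hemisphere letter, so a tagging tool has to convert the decimal degrees most maps show. The function name `to_exif_dms` is made up for illustration, and actually writing the values into an image file needs a separate EXIF library that is not shown here.

```python
from fractions import Fraction


def to_exif_dms(decimal_degrees, is_latitude):
    """Convert signed decimal degrees to (hemisphere, ((deg, 1), (min, 1), (sec_num, sec_den))).

    Hypothetical helper: the tuple layout mirrors how EXIF GPS tags store
    three rationals plus a reference letter (N/S or E/W).
    """
    if is_latitude:
        ref = "N" if decimal_degrees >= 0 else "S"
    else:
        ref = "E" if decimal_degrees >= 0 else "W"

    value = abs(decimal_degrees)
    degrees = int(value)
    minutes_full = (value - degrees) * 60
    minutes = int(minutes_full)
    seconds = (minutes_full - minutes) * 60

    # Keep seconds as a rational with a small denominator, as EXIF expects.
    sec = Fraction(seconds).limit_denominator(1000)
    return ref, ((degrees, 1), (minutes, 1), (sec.numerator, sec.denominator))


if __name__ == "__main__":
    print(to_exif_dms(37.5, is_latitude=True))
    print(to_exif_dms(-122.25, is_latitude=False))
```

The sign of the input only decides the hemisphere letter; the stored numbers themselves are always non-negative.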

Surprisingly, this issue has yet to be acknowledged by Google, despite being first reported just over a month ago. Given that the glitch depends on something as obscure as a locale setting, it's likely to be a very simple fix once an engineer has time for it. While a little missing data in photospheres is certainly not as critical as some of the other issues covered in previous Bug Watch posts, it's disappointing that an important component of this headlining feature hasn't received a bit more attention. Like all bugs, we expect to see this one knocked out pretty soon.

Personal Sensor System

DO-RA Is An Environmental Sensor That Plugs Into Your Phone & Tracks Radiation Exposure

TechCrunch by Natasha Lomas Yesterday

There’s a thriving cottage industry of smartphone extension hardware that plugs into the headphone jack on your phone and extends its capabilities in one way or another — feeding whatever special data it grabs back to an app where you get to parse, poke and prod it. It’s hard to keep track of the cool stuff people are coming up with to augment phones — whether it’s wind meters or light meters or even borescopes. Well, here’s an even more off-the-wall extension: meet DO-RA — a personal dosimeter-radiometer for measuring background radiation.

Granted, this is not something the average person might feel they need. And yet factor in the quantified self/health tracking trend and there is likely a potential market in piquing the interest of quantified selfers curious about how much background radiation they are exposed to every day. Plus there are of course obvious use-cases in specific regions that have suffered major nuclear incidents, like Fukushima or Chernobyl, or for people who work in the nuclear industry. DO-RA’s creators say Japan is going to be a key target market when they go into production. Other targets are the U.S. and Europe. The company reckons it will initially be able to ship 1 million DO-RA devices per year into these three markets. The device is due to go into commercial production this autumn.

The Russian startup behind DO-RA, Intersoft Eurasia, claims to have garnered 1,300 pre-orders for the device over the last few months, without doing any dedicated advertising — the majority of pre-orderers are apparently (and incidentally) male iPhone and iPad owners. So it sounds like it’s ticking a fair few folks’ ‘cool gadget’ box already.

The DO-RA device will retail for around $150 — which Intersoft says is its primary disruption, being considerably lower than rival portable dosimeters, typically costing $250-$400. It names its main competitors as devices made by U.S. company Scosche, and Japanese carrier NTT DoCoMo. Last year Japan’s Softbank also announced a smartphone with an integrated radiation dosimeter, with the phone made by Sharp. This year, a San Francisco-based startup has also entered the space, with a personal environmental monitoring device, called Lapka (also costing circa $250), so interest in environmental-monitoring devices certainly appears to be on the rise.

DO-RA — which is short for dosimeter-radiometer — was conceived by its Russian creator, Vladimir Elin, after reading articles on the Fukushima Daiichi nuclear disaster in Japan, and stumbling across the idea of a portable dosimeter. A bit more research followed, patents were filed and an international patent was granted on the DO-RA concept in Ukraine, in November last year. Intersoft has made several prototypes since 2011 — and produced multiple apps, for pretty much every mobile and desktop platform going — but is only now gearing up to get the hardware product into market. (Its existing apps are currently running in a dummy simulation mode.)

So what exactly does DO-RA do? The universal design version of the gadget will plug into the audio jack on a smartphone, tablet or laptop and, when used in conjunction with the DO-RA app, will be able to record radiation measurements — using a silicon-based ionizing radiation sensor — to build up a picture of radiation exposure for the mobile owner or at a particular location (if you’re using it with a less portable desktop device).

The system can continuously monitor background radiation levels, when the app is used in radiometer mode (which is presumably going to be the more battery-draining option — albeit the device contains its own battery), taking measurements every four seconds. There’s also a dosimeter mode, where the app measures “an equivalent exposure over the monitoring period” and then forecasts annual exposure based on that snapshot.
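The dosimeter mode described above boils down to simple arithmetic, sketched here under loudly stated assumptions: readings are dose rates in microsieverts per hour (the article does not give the unit), one reading arrives every four seconds (from the article), and the annual forecast is a plain linear extrapolation of the average rate. The function names are hypothetical and are not taken from the DO-RA app.

```python
HOURS_PER_YEAR = 24 * 365
SAMPLE_INTERVAL_S = 4  # the article says a measurement is taken every four seconds


def accumulated_dose_usv(dose_rates_usv_per_h, interval_s=SAMPLE_INTERVAL_S):
    """Sum dose-rate samples (assumed µSv/h) into a dose for the monitored period (µSv)."""
    return sum(rate * interval_s / 3600 for rate in dose_rates_usv_per_h)


def forecast_annual_dose_usv(dose_rates_usv_per_h, interval_s=SAMPLE_INTERVAL_S):
    """Extrapolate the monitored period to a full year, assuming the average rate holds."""
    if not dose_rates_usv_per_h:
        raise ValueError("need at least one sample")
    monitored_hours = len(dose_rates_usv_per_h) * interval_s / 3600
    mean_rate = accumulated_dose_usv(dose_rates_usv_per_h, interval_s) / monitored_hours
    return mean_rate * HOURS_PER_YEAR


if __name__ == "__main__":
    one_hour = [0.1] * 900  # 900 samples at 4 s each = 1 hour at a steady 0.1 µSv/h
    print(accumulated_dose_usv(one_hour))
    print(forecast_annual_dose_usv(one_hour))
```

A forecast like this is only as good as the snapshot it is based on: an hour spent somewhere unusual would skew the yearly figure.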

The company lists the main functions of the DO-RA mobile device plus app as:

- Measuring the hourly/daily/weekly/monthly/annual equivalent radiation dose received by an owner of a mobile/smart phone;

- Warning on allowable, maximum and unallowable equivalent radiation dose by audible alarms/messages of a mobile/smart phone: “Normal Dose”, “Maximum Dose”, “Unallowable Dose”;

- Development of trends of condition of organs and systems of an owner of a mobile phone subject to received radiation dose;

- Advising an owner of a mobile/smart phone on prevention measures subject to received radiation dose;

- Receiving data (maps of land, water and other objects) on radiation pollution from radiation monitoring centres collected from DO-RA devices;

- Transferring collected data through wireless connection (Bluetooth 4.0) to any electronic devices within 10 meters.

Why does it need to transfer collected data? Because the startup has big data plans: it’s hoping to be able to generate real-time maps showing global background radiation levels based on the data its network of DO-RA users will ultimately be generating. To get the kind of volumes of data required to create serious value they’re also looking to shrink their hardware right down — and stick it inside the phone. On a chip, no less.

The DO-RA.micro design, which aims to integrate the detector into the smartphone’s battery, is apparently “under development” at present. The final step in the startup’s incredible shrinking roadmap is DO-RA.pro in which the radiation-sensing hardware is integrated directly into the SoC. “This advanced design is under negotiations now”, it says.

It will doubtless be an expensive trick to pull off, but if DO-RA’s makers are able to drive their technology inside millions of phones as an embedded sensor that ends up being included as standard they could be sitting atop a gigantic environmental radiation-monitoring data mountain. Still, they are a long way off that ultimate goal. In the meantime they are banking on building out their network via a universal plug-in version of DO-RA, which smartphone owners can use to give their current phone the ability to sniff out radiation.

In addition to the basic universal plug-in, they have created an apple-shaped version, called Yablo-Chups (pictured left), presumably aimed at appealing to the Japanese market (judging by the kawaii design). They are also eyeing the smartwatch space (but then who isn’t?), producing a concept design for an electromagnetic field monitoring watch that warns its owner of “unhealthy frequencies.” It remains to be seen whether that device will ever be more than vaporware.

All these plans are certainly ambitious, so what about funding? Elin founded Intersoft Eurasia in 2011 and has managed to raise around $500,000 to date, including a $35,000 grant from Russia’s Skolkovo Foundation, which backs technology R&D projects to support the homegrown Tech City/startup hub. In September 2013 Intersoft says it’s expecting to get a more substantial grant from the Foundation, of up to $1 million, to supplement its funding as it kicks off commercial production of DO-RA. It also apparently has private investors (whose identity it’s not disclosing at this time) willing to invest a further $250,000.

Even so, DO-RA’s creators say they are still on the look out for additional investment — either “in the nuclear sphere” or a “big net partner to promote DO-RA” in their main target markets. Additional investment is likely required to achieve what Intersoft describes as its “main goal”: producing a microchip with an embedded radiation sensor. That goal suggests that the current craze for hardware plug-ins to extend phone functionality may be somewhat transitionary — if at least some of these additional sensors can (ultimately) be shrunk down and squeezed into the main device, making mobiles smarter than ever right out of the box.

TechCrunch’s Steve O’Hear contributed to this article

100GB Blu-ray

Triple-layer Blu-ray discs for 4K video teased with 100GB capacity

updated 06:48 am EDT, Thu September 12, 2013

Blu-ray discs for 4K content are already being made, with one disc producer claiming to be testing the production of the 100GB-capacity media. Singulus has revealed it has created its new replication line for triple-layer Blu-ray discs, ahead of an expected announcement of the technology from the Blu-ray Disc Association.

The confirmation, in the form of a press release spotted by TechRadar, states that the 100GB figure comes from not only using three layers rather than two or one, but also that a change in the data compression method allows for up to 33GB of information to be stored on each layer, instead of the current 25GB.
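The capacity claim is easy to check with back-of-the-envelope arithmetic. The sketch below uses only the per-layer figures quoted above (25GB today, up to 33GB on the new discs); the function name is made up for illustration.

```python
def disc_capacity_gb(layers, gb_per_layer):
    """Total capacity of a multi-layer disc, ignoring any formatting overhead."""
    return layers * gb_per_layer


if __name__ == "__main__":
    print(disc_capacity_gb(1, 25))  # single-layer disc at the current density
    print(disc_capacity_gb(2, 25))  # dual-layer disc at the current density
    print(disc_capacity_gb(3, 33))  # triple-layer disc at the quoted new density
```

Three layers at 33GB come to 99GB, so the headline 100GB is a rounded figure based on the "up to 33GB" quoted per layer.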

The BDA has yet to formally announce an enhanced Blu-ray format, but a spokesperson speaking to TechRadar hopes that it can be revealed before the end of this year. It is keen to avoid another format war, such as that between Blu-ray and HD-DVD, though it is quick to point out that competing systems, such as the joint effort by Sony and Panasonic on 300GB optical disc storage, are more for enterprise, with the final version heading to consumers consisting of a single optical disc.

A new high-density media format is likely to help the adoption of Ultra HD televisions, due to current media distribution issues. Testing by Netflix and Sony's launched 4K download service will be hampered by those using low-bandwidth connections, while 4K-resolution television broadcasts are still only in testing.

Read more: http://www.electronista.com/articles/13/09/12/blu.ray.disc.producer.outs.format.before.official.announcement/#ixzz2egXpWotx

300GB Optical Disks

Sony, Panasonic working on optical discs capable of storing 300GB

Electronista | The Macintosh News Network 9:41 am

Sony and Panasonic are collaborating on a new optical disc format with a potential recording capacity of at least 300GB. The basic agreement between the two companies to develop the standard seeks to create professional-use optical discs aimed at the archiving market, and hopes to start selling the new discs to enterprise customers by the end of 2015....

Wednesday, October 9, 2013

Video Edition across and between Teams

TechCrunch by Ryan Lawler Yesterday

Over the years, content creators have benefited from more powerful video editing software, but as the tools for editing have improved, the way content creators manage those edits hasn’t kept pace. These days, videos are produced and edited by widely distributed teams that mostly use email and spreadsheets to keep track of all the different assets and revisions that are being worked on.

First Cut Pro, which is launching at TechCrunch Disrupt today, seeks to change all that. It provides editing teams with a cloud-based tool for collaborating and monitoring all the different changes that are made to videos during the post-production process. The tool allows editors to submit video files, which can then be watched directly through the platform. Different stakeholders can then leave comments or feedback directly within the platform, which will pause the video being watched and time-stamp where revisions should be made. That’s a big step forward from how the process is normally done today, which includes lots of pausing videos, going into spreadsheets and manually making notes of timestamps and revisions.

Users can respond to feedback inline on the platform, or they can respond from email notifications that are sent whenever changes or comments have been made to a project. It allows stakeholders to manage multiple projects and revisions at once, and integrates directly into the editing environment.

First Cut Pro supports the ability to export markers into .CSV files for spreadsheets, .XML metadata files for Adobe Premiere, After Effects and Apple’s Final Cut Pro, as well as .txt files for importing into AVID Media Composer. That said, videos aren’t stored in the cloud. They’re stored locally on the viewer’s computer or on network attached storage. That means that high-quality versions of the videos can be viewed instantly.

The platform has a SaaS-based licensing model for users, with variable pricing based on the number of collaborators and projects that are being worked on at once. Pricing starts free for individual accounts with up to five collaborators. If you need more than that, freelancer accounts start at $39 a month and go up to $119 a month for power users with up to 20 collaborators and 7 projects. Above that, enterprise pricing plans are available.
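To picture what a marker export to .CSV might look like, here is a rough sketch. The `Marker` fields, the column names and the hh:mm:ss timecode format are all assumptions made for illustration; the article does not describe First Cut Pro's actual file layout.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class Marker:
    """Hypothetical time-stamped review comment."""
    timecode_s: float
    author: str
    comment: str


def format_timecode(seconds):
    """Render seconds as hh:mm:ss (frames are ignored in this sketch)."""
    hours, remainder = divmod(int(seconds), 3600)
    minutes, secs = divmod(remainder, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}"


def markers_to_csv(markers):
    """Return the markers as CSV text, sorted by position in the video."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["timecode", "author", "comment"])
    for marker in sorted(markers, key=lambda m: m.timecode_s):
        writer.writerow([format_timecode(marker.timecode_s), marker.author, marker.comment])
    return buffer.getvalue()


if __name__ == "__main__":
    notes = [
        Marker(75.0, "client", "Trim this shot"),
        Marker(5.0, "editor", "Fix, audio"),
    ]
    print(markers_to_csv(notes))
```

Using the `csv` module rather than joining strings by hand means comments containing commas or quotes are escaped correctly when the file is opened in a spreadsheet.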

Question & Answer

Q: How big of a problem is this?

A: Between movies, TV, and ads, there’s probably $30 billion spent on post-production. There’s probably 20,000 ad agencies. Just in advertising, it’s pretty sizable. Video is literally ubiquitous.

Q: How do you get the client to log in?

A: Our goal was to make it extremely easy to use. However, if you do want to have an ongoing relationship, the client gets notified when changes are made.

Q: What were customers using before this?

A: Typically they use emails and phone calls. One client used spreadsheets. You keep content on servers and we’ll work with that.

Q: How do you capture customers?

A: Today it’s very freelancer-focused. They work with different clients and use this tool, getting those clients to also use them.

Sony DROIDcams

Sony's Camera Remote API allows WiFi-equipped devices to control its cameras, act as a second screen

Engadget by Edgar Alvarez 2:58 am

This year's IFA has been rather eventful for Sony: the company unveiled a new handset, some interesting cameras and even a recorder that can turn you into the next Justin Bieber. But lost in the shuffle was an announcement that the Japanese outfit's also releasing its Camera Remote API, albeit in beta. Sony says the idea here is to provide developers with the ability to turn WiFi-ready devices, such as smartphones and tablets, into a companion for many of its shooters -- i.e. act as a second display or be able to shoot images / video remotely.

The Camera Remote API will be friendly with novel products including the Action Cam HDR-AS30, HDR-MV1 Music Video Recorder and both DSC-QX lens cameras, as well as older models like the NEX-6, NEX-5R and NEX-5T. This is definitely good news for current and future owners of any of the aforementioned, since the new API can certainly add much more value to Sony's cameras via the third-party app creations that are born from it.

Filed under: Cameras, Software, Sony

Sony Hopes You'll Carry A Lens In Your Pocket

TechCrunch by Matt Burns Yesterday

Remember that time when your smartphone camera just wasn’t good enough? That time when you wanted a picture that was just slightly better? You know, the time you lugged a pocket camera to your kid’s karate lesson or significant other’s colonoscopy. Well. Sony will soon have a product for you, friend!

The Sony QX10 and QX100 are leaking from all corners of the Internet following their surprise appearance last month. The products are essentially two-thirds of a camera designed to connect to a smartphone wirelessly or through a dock. Sony has created a whole new system that replaces a phone’s camera with a new sensor and glass. For better or worse, of course.

According to Sony Alpha Rumors, the QX10 will feature a 1/2.3-inch 18-megapixel sensor paired with an f/3.3-5.9 lens. The QX100 will have a high-quality 1-inch 20.2-megapixel Exmor R sensor and an f/1.8-4.9 Carl Zeiss lens. Reportedly, the QX10 will be $250 and the QX100 will be $450. The QX line is based on fantastic Sony point-and-shoot cameras with the QX10 looking most like the WX150 and the QX100 grabbing most of the RX100m2’s magic.

The concept is solid, but the market might be tepid. With the right software, a smartphone packs all the goods necessary to process a photo. These products essentially allow smartphones to capture higher-quality images and more importantly, share these images a whole lot quicker.

It’s just too bad these first-generation models are so expensive.

Let’s not forget this has been done before. Will.i.am and Fusion Garage (and CrunchPad engineer) Chandra Rathakrishnan beat Sony to this idea with the fashion-focused i.am+ foto.sosho V.5. But it doesn’t appear to have ever hit the market. Thankfully. It was ludicrous and smelled of vapor from the start.

Sony’s take is much more legitimate and original. As Chris explained when the products first started leaking online, Sony has created a product that moves the camera hardware outside of the smartphone, creating a platform that’s device-agnostic and gives consumers the option of using this device on future hardware.

Don’t expect these little lenses to be a huge hit right out of the gate. Sony probably doesn’t. This is clearly a low-volume product designed to test the market. But Sony as of late is back to its slow and steady product cycle. This product line is a clever cross between two of the company’s main product categories, mobile and digital imaging. Sony is going to do its damnedest to get consumers to carry a lens in their pocket instead of a pocket shooter.

Sony QX10 and QX100 Press Release Leaked, Pricing and Full Details Now Known

Droid Life: A Droid Community Blog by Tim-o-tato Yesterday

Why wait for IFA and Sony’s official announcement when we live in this amazing digital age? Today, a press release and product video for the Sony QX10 and QX100 hit the web, so now there are no more secrets about these two accessories. If you wanted to know pricing, availability and full specs, then read on.

The QX100 will be the more expensive of the two, coming in at around $500. It features the same sensor that is found in the RX100 II, capable of taking very low-noise and “exceptionally detailed” photographs. The QX10 will come priced in at around $250, featuring an 18.2 megapixel Exmor R™ CMOS sensor and 10x optical zoom.

Both lens-style cameras work over WiFi to connect to any Android device that is running Honeycomb or later. Connecting to devices is easy, with NFC capabilities that allow users to easily bump the two devices to begin the connection. From there, you can either use a special attachment to connect the lens to your phone or even utilize a special tripod for taking still shots.

The devices will be available later this month through Sony’s online store. Interested?

Via: Sony Alpha Rumors

New Sony QX100 and QX10 “Lens-Style Cameras” Redefine the Mobile Photography Experience

New Concept Cameras Link Flawlessly to Smartphone, Offering High-Zoom, Stunning Quality Images and Full HD Videos for Instant Sharing

Merging the creative power of a premium compact camera with the convenience and connectivity of today’s smartphones, Sony today introduced two “lens-style” QX series cameras that bring new levels of fun and creativity to the mobile photography experience.

The innovative Cyber-shot™ QX100 and QX10 models utilize Wi-Fi® connectivity to instantly transform a connected smartphone into a versatile, powerful photographic tool, allowing it to shoot high-quality images and full HD videos to rival a premium compact camera. It’s an entirely new and different way for consumers to capture and share memories with friends and family.

With a distinct lens-style shape, the new cameras utilize the latest version of Sony’s PlayMemories™ Mobile application (available for iOS™ and Android™ devices, version 3.1 or higher required) to connect wirelessly to a smartphone, converting the bright, large LCD screen of the phone into a real-time viewfinder with the ability to release the shutter, start/stop movie recordings, and adjust common photographic settings like shooting mode, zoom, Auto Focus area and more.

For added convenience, the app can be activated using NFC one-touch with compatible devices. Once pictures are taken, they are saved directly on both the phone and the camera*, and can be shared instantly via social media or other common mobile applications.

“With the new QX100 and QX10 cameras, we are making it easier for the ever-growing population of ‘mobile photographers’ to capture far superior, higher-quality content without sacrificing the convenience and accessibility of their existing mobile network or the familiar ‘phone-style’ shooting experience that they’ve grown accustomed to,” said Patrick Huang, director of the Cyber-shot business at Sony. “We feel that these new products represent not only an evolution for the digital camera business, but a revolution in terms of redefining how cameras and smartphones can cooperatively flourish in today’s market.”

The new compact, ultra-portable cameras can be attached to a connected phone with a supplied mechanically adjustable adapter, or can be held separately in hand or even mounted to a tripod while still maintaining all functionality and connectivity with the smartphone. They can also be operated as completely independent cameras if desired, as both the QX100 and QX10 cameras have a shutter release, memory card slot and come with a rechargeable battery.

Premium, Large-Sensor QX100 Camera

The Cyber-shot QX100 camera features a premium, high-quality 1.0 inch, 20.2 MP Exmor R™ CMOS sensor. Identical to the sensor found in the acclaimed Cyber-shot RX100 II camera, it allows for exceptionally detailed, ultra-low noise images in all types of lighting conditions, including dimly lit indoor and night scenes.

The sensor is paired with a fast, wide-aperture Carl Zeiss Vario-Sonnar T* lens with 3.6x optical zoom and a powerful BIONZ image processor, ensuring beautifully natural, detail-packed still images and Full HD videos. As an extra refinement, the QX100 sports a dedicated control ring for camera-like adjustment of manual focus and zoom.

Several different shooting modes can be selected while using the QX100 including Program Auto, Aperture Priority, Intelligent Auto and Superior Auto, which automatically recognizes 44 different shooting conditions and adjusts camera settings to suit.

High-Zoom Cyber-shot QX10 model

Boasting a powerful 18.2 effective megapixel Exmor R™ CMOS sensor and versatile 10x optical zoom Sony G Lens, the Cyber-shot QX10 camera allows mobile photographers to bring distant subjects closer without sacrificing image quality or resolution, a common problem in smartphones. It’s also extremely portable and lightweight: weighing less than 4 oz and measuring about 2.5” x 2.5” x 1.3”, it’s a great tool for travel photography.

Additionally, the camera has built-in Optical SteadyShot to combat camera shake, keeping handheld pictures and videos steady and blur-free. It has Program Auto, Intelligent Auto and Superior Auto modes to choose from, and will be available in two different colors – black and white.

Pricing and Availability

The new Cyber-shot QX100 and QX10 lens-style cameras will be available later this month for about $500 and $250, respectively.

The cameras and a range of compatible accessories including a soft carry case and dedicated camera attachment for Sony Mobile phones like the Xperia™ Z can be purchased at Sony retail stores (www.store.sony.com) and other authorized dealers nationwide.

Please visit www.blog.sony.com for a full video preview of the new Sony Cyber-shot QX Series cameras and follow #SonyCamera on twitter for the latest camera news.

Spike the Punch

Spike Kickstarter Project Puts Accurate Laser Measurement Hardware Right On Your Smartphone

TechCrunch by Darrell Etherington 3:01 am

Smartphones have pretty good cameras, but nowhere near good enough to do the kind of high accuracy measurement work that’s required for engineering or remodelling projects. Enter Spike, a new smartphone attachment designed by ikeGPS, a company that specializes in building fit-for-purpose laser hardware for use in surveying and 3D modelling.

The Spike is a version of their solution that attaches to the back of a smartphone and integrates directly with software on those devices to make it possible to measure objects and structures accurately from up to 600 feet away, just by taking a picture with your device. The accessory itself adds a laser range finder, advanced GPS, a 3D compass and another digital camera to your smartphone’s existing capabilities, and it’s much more portable than existing solutions (pocketable, even, according to ikeGPS).
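How might a laser distance plus a photo turn into a measurement? The article doesn't say how Spike does it, so treat the following as a generic geometry sketch, not ikeGPS's method. It assumes a simple pinhole camera, a target that faces the camera squarely and sits in the middle of the frame, and a known horizontal field of view. All function names and sample numbers are hypothetical.

```python
import math


def angular_extent_deg(pixel_span, image_width_px, horizontal_fov_deg):
    """Angle subtended by a centred span of pixels under a pinhole-camera model."""
    focal_px = (image_width_px / 2) / math.tan(math.radians(horizontal_fov_deg) / 2)
    return math.degrees(2 * math.atan((pixel_span / 2) / focal_px))


def object_width_m(distance_m, angle_deg):
    """Width of a flat target facing the camera, given its range and subtended angle."""
    return 2 * distance_m * math.tan(math.radians(angle_deg) / 2)


if __name__ == "__main__":
    # Made-up numbers: a wall spanning 1000 of 4000 pixels, 50 m away, 60 degree lens.
    angle = angular_extent_deg(1000, 4000, 60)
    print(round(object_width_m(50, angle), 2), "metres wide")
```

The range finder supplies the one thing a photo alone cannot: absolute scale. Without the distance, the same pixel span could be a small object nearby or a large one far away.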

The benefits of the Spike and its powers are evident for the existing market ikeGPS already sells to; telecom and utility companies, architects, city planners, builders and more would be better served with a simple portable accessory and the phone they already have in their pocket than by specialized equipment that’s heavy, bulky, requires instruction on proper use and lacks any kind of easy instant data portability like you’ll get from a smartphone app’s “Share” functions.

But ikeGPS is after a new market segment with the Spike, too. It says the device is “built for developers & hackers,” and suggests augmented reality as a possible consumer application, but is interested in seeing exactly what the dev community can come up with via its full-featured API. Laser accurate measurements could indeed bring interesting features to location-based apps, though Spike is clearly more interested in letting developers more experienced with that segment of the market figure out the details.

Spike plans to eventually build a case attachment to make it compatible with any phone and case combo, though at launch it’ll be doing this via a CAD model which owners of the device can use to get mounts 3D printed themselves. It’ll work a bit like the Sony QX10 and QX100 smartphone camera lens accessories, it sounds like, and make it possible to use with any iOS or Android device.

The goal of Spike’s founding team, which includes founder and CTO Leon Toorenburg, who built ikeGPS (née Surveylab) to fit the needs of professionals, is to make this kind of tech widely available. It’s another example of costs associated with tech decreasing quickly, and making it ultimately possible to provide something that once required a professionally trained operator and expensive, specialized hardware usable by anyone with a phone. ikeGPS tech has been used by UN and US Army engineers in disaster recovery and emergency response, and now its team wants to make those same capabilities open to app developers. Others like YC company Senic are looking to accomplish similar things, but Spike’s vision is much more sweeping at launch.

The project is just over halfway to its $100,000 funding goal, and $379 scores backers a pre-order unit, which is scheduled to ship in April next year. Building a consumer device is different from building very specialized hardware on what’s likely a made-to-order basis, but at least the team has the know-how and experience to make its tech actually work.

Tuesday, October 8, 2013

GPS stuff - the essence FYI

https://www.sparkfun.com/pages/GPS_Guide

GPS Buying Guide

Above your head, right now, at an altitude of about 20,200 km there is a system of navigation satellites. There’s 35 of them, 24 active at any given time. It cost millions and millions of dollars to put those satellites in the sky and link them appropriately and you know what they’re doing?

They’re helping you find that place your friend told you about that one time.

The GPS, or Global Positioning System, is accessible from almost everywhere on Earth and provides exact coordinates of your current location so that you can figure out where you are. Combine that information with a good map and there’s nothing you can’t find. But what if you want that uncanny sense of direction in, say, your pet robot? Good news, GPS modules are small, lightweight and inexpensive. They’re also pretty easy to use.

There are a ton of GPS modules on the market these days and it can be hard to figure out what you need for your project. Hopefully this guide will demystify GPS a little bit and get you on the right track.

Actually, tell you what: Go read about GPS on Wikipedia, it’s a great article to get you familiar with the technology. Our job is to teach you how to use it… Now how do we actually use it? It’s gloriously simple. Every GPS module works the same: power it, and within 30 seconds to a minute, it will output a string of ASCII characters like this:

GPGGA, GPGLL,

GPGSA, GPGSV,

GPRMC, GPVTG
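The names above are NMEA 0183 sentence types. As a rough sketch of what handling one of those ASCII strings looks like, the Python below checks a sentence's checksum (the XOR of every character between `$` and `*`) and converts the `ddmm.mmmm` latitude and longitude fields of a GPGGA sentence to decimal degrees. The helper names are hypothetical and the sample sentence is an illustrative fix, not output from any particular module.

```python
def nmea_checksum(body: str) -> str:
    """XOR of all characters between '$' and '*', as two uppercase hex digits."""
    value = 0
    for ch in body:
        value ^= ord(ch)
    return f"{value:02X}"


def parse_sentence(line: str):
    """Split one NMEA sentence into (type, fields); raise ValueError on bad input."""
    line = line.strip()
    if not line.startswith("$") or "*" not in line:
        raise ValueError("not an NMEA sentence")
    body, _, given = line[1:].partition("*")
    if nmea_checksum(body) != given.upper():
        raise ValueError("checksum mismatch")
    fields = body.split(",")
    return fields[0], fields[1:]


def to_decimal_degrees(value: str, hemisphere: str) -> float:
    """Convert NMEA ddmm.mmmm (or dddmm.mmmm) to signed decimal degrees."""
    dot = value.index(".")
    degrees = int(value[:dot - 2])      # everything before the two minute digits
    minutes = float(value[dot - 2:])    # mm.mmmm
    result = degrees + minutes / 60.0
    return -result if hemisphere in ("S", "W") else result


# Illustrative GPGGA sentence; the checksum is computed here rather than hard-coded
body = "GPGGA,123519,4807.038,N,01131.000,E,1,08,0.9,545.4,M,46.9,M,,"
sentence = f"${body}*{nmea_checksum(body)}"

kind, fields = parse_sentence(sentence)
lat = to_decimal_degrees(fields[1], fields[2])
lon = to_decimal_degrees(fields[3], fields[4])
print(kind, round(lat, 4), round(lon, 4))
```

In a real project the lines would come from the module's serial port, and only the sentence types you care about (often GPGGA and GPRMC) would need parsing.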

PhotoSpheres gets a home

Introducing “Views” - A new way to contribute your 360° photo spheres to Google Maps

Google LatLong by Lat Long (noreply@blogger.com) 1:37 pm

Wherever life’s adventures may take us, our photos help us remember and share the places we care about. When photos are added to a map, whether they’re from your camera or through Street View, they record unique experiences that collectively create the story of a place and what it looked like at a particular moment in time.

Today, we are launching a new community site called Views that makes it easy for people to publicly share their photos of places by contributing photo spheres to Google Maps (photo spheres are 360º panoramas that can easily be created with your Android phone).

Below is a screenshot of the new Google Maps for desktop, showing a photo sphere that I shared one morning in Hawaii while my wife and I walked along the beach near our old neighborhood. The thumbnails at the bottom show our comprehensive photo coverage, with each image accurately placed on the map.

A photo sphere in the new Google Maps. Can you feel the sand between your toes?

Photo spheres can be created with the camera in Android 4.2 or higher, including most Nexus devices and the new Nexus 7 tablet. This short video will show you how to get started. You can also share panoramas you’ve created with your DSLR camera (learn more on our help center).

To upload 360º photo spheres, just sign into the Views site with your Google+ profile and click the blue camera button on the top right of the page. This will enable you to import your existing photo spheres from your Google+ photos. You can also upload 360º photo spheres to Views from the Gallery in Android by tapping “Share” and then selecting Google Maps.

Below you can see my Views page, which is filled with photo spheres and descriptions about my experiences in the places I’ve visited. I created these during my travels, including day trips and hikes around the San Francisco Bay Area, as well as far away adventures to Hawaii, Sydney, Beijing, and Paris. Sometimes I also share photo spheres around the Google campus in Mountain View. You can explore them all on this map.

Screenshot of my Views page

Since Views also incorporates the Street View Gallery, you can check out incredible panoramas of our most popular Street View collections, from the Grand Canyon to the Swiss Alps. Just click on “Explore” at the top of the Views site to browse a map of these special collections right alongside community-contributed photo spheres.

So, when you’re on your next adventure, don’t forget your camera and your Android phone to create and share some photo spheres of places that inspire you... we can’t wait to see them!


Posted by Evan Rapoport, Product Manager, Google Maps & Photo Sphere

Google Rolls Out New “Views” Site For Geotagging And Sharing Your Android Photo Spheres

TechCrunch by Chris Velazco 3:12 pm

If you’ve got an Android device running version 4.2 or later, chances are you’ve tried capturing a photo sphere — one of those nifty little 360-degree panoramas that let you spin around to capture your surroundings until vertigo sets in. Instead of just letting those photo spheres languish on your phone or on your Google+ account, though, Google has thought up something awfully keen for them.

You know what I’m getting at (the headline was probably hint enough). Google has fired up a new Views page that lets users tie their photo spheres to specific locations for when static maps and satellite fly-bys just aren’t immersive enough.

The process is simple enough: once you’re logged in to Google+ and mosey over to the Views page, you’re given the option to import all of the photo spheres stored in your Google+ account. Haven’t uploaded them to Google+ yet? That’s fair — you can upload them to Google Maps straight from the stock Android gallery app, too. Google Product Manager Evan Rapoport also confirmed that users who share those photo spheres will also be able to view them from their own Views user page, which looks a little something like this. As you’d expect, you’ve got easy access to a grid of all your photo spheres, but a single click lets you pull out into a wider map view to see where all of those spheres were captured.

It’s all rather neat, but to be quite honest it’s about time Google managed to make the whole photo sphere experience meatier. Sure, they’re easy enough to shoot, and the end results are generally pretty impressive, but users were always fairly limited with what they could do with those photo spheres after the fact. At least now users who have dedicated themselves to creating awesome photo spheres (I’m sure there are more than a few people who fall into that category) have a centralized spot to show off some of their most impressive work. Of course, it’s not hard to see how Google benefits from this.

As intrepid as Google’s crew of drivers and trekkers are, there’s only so much in terms of resources the company can devote to making the world’s varying locales accessible from a web browser. Now that it’s easier for folks to share their photo spheres, Google could theoretically serve up on-the-ground views of any (human-accessible) location by displaying those photo spheres in Google Maps proper. I wouldn’t expect Google to try anything quite that bold until Android 4.2’s adoption figures swell a bit, but it’s certainly something to keep an eye out for.

ViewNX2

Nikon ViewNX 2.8.0 released

Nikon Rumors by [NR] admin 10:51 am

Nikon released a new version of ViewNX. Modifications enabled with 2.8.0 for both the Windows and Macintosh versions are:

- Support for the COOLPIX L620 has been added.

- GeoTag in the toolbar has been changed to Map. In addition, GPS data and GPS information have been renamed Location data.

- The following support has been added for Motion Snapshots:

- Slideshow function

- When Short Movie Creator or Movie Editor is selected from the Open With... option in the File menu, users can now choose Movies (MOV) and JPEG images or Movies (MOV).

- However, to choose one of the above, Short Movie Creator or Movie Editor must be registered in Open With > Register... > Open with Application beforehand.

- An Add/Edit Directional option has been added to the Map toolbar.

- A Merge Altitude Log with Track Log... option has been added to the Log Matching item.

A location log saved with a camera, mobile phone, or other commercially available satellite navigation system receiver capable of acquiring location data can now be merged with an altitude (barometer) and depth log* saved using a Nikon digital camera.

* Altitude (barometer) and depth logs are recorded by Nikon digital cameras equipped with barometers or depth gauges.

- Installation of QuickTime is no longer required.

- Frame rate options of 50 fps and 60 fps are now available for movies created using the Create Movie... item in the Nikon Movie Editor File menu and output at a size of 1280 x 720 (16:9) and 1920 x 1080 (16:9). However, the following two requirements apply when 50 fps or 60 fps is selected for Frame rate:

- Only movies have been added to the storyboard (no still images)

- An option other than MOV (MotionJPEG/Linear PCM) is selected for File type

Additional modifications to the Macintosh version

- Image display with the slideshow function is now compatible with Retina Display.
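For readers wondering what merging an altitude log with a track log involves in principle, here is a minimal sketch. It is not Nikon's implementation or file format: it assumes both logs are already in memory as time-sorted lists, matches each track point to the nearest altitude sample by timestamp, and leaves the altitude empty when the closest sample is more than a (hypothetical) `max_gap_s` seconds away. All names and sample values are made up for illustration.

```python
from bisect import bisect_left


def merge_logs(track, altitude, max_gap_s=5.0):
    """Attach the nearest-in-time altitude to each track point.

    track:    list of (t, lat, lon) tuples sorted by time t (seconds)
    altitude: list of (t, alt_m) tuples sorted by time t (seconds)
    Returns a list of (t, lat, lon, alt_m or None).
    """
    alt_times = [t for t, _ in altitude]
    merged = []
    for t, lat, lon in track:
        i = bisect_left(alt_times, t)
        # Only the samples just before and just after t can be the nearest
        candidates = [j for j in (i - 1, i) if 0 <= j < len(altitude)]
        best = min(candidates, key=lambda j: abs(alt_times[j] - t), default=None)
        if best is not None and abs(alt_times[best] - t) <= max_gap_s:
            merged.append((t, lat, lon, altitude[best][1]))
        else:
            merged.append((t, lat, lon, None))
    return merged


# Made-up sample data: three track points, two barometer readings
track = [(0, 35.000, 139.000), (10, 35.001, 139.001), (60, 35.002, 139.002)]
altitude = [(1, 12.0), (9, 15.5)]
merged = merge_logs(track, altitude)
for row in merged:
    print(row)
```

Nearest-sample matching is the simplest choice; interpolating between the two neighbouring altitude readings would be a natural refinement when the barometer logs less often than the receiver.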

Marines to Bring their Own Device HuRah!!

Enterprise IT

Marine Corps mobile device strategy looks to cut costs

- By Nicole Grim

- Jul 26, 2013

Squeezed by budget cuts and seeking to leverage commercial technologies, the U.S. Marine Corps is adopting a new approach to its mobile device strategy as a way to creatively cut costs while getting secure handsets into the hands of more service personnel. In a keynote presentation at the Defense Systems Innovation Forum on July 25, Rob Anderson, chief of the Marine Corps’ vision and strategy division, outlined the strategy that leverages collaboration with commercial partners and other federal agencies.

Anderson said the requirements of the Marine Corps’ mobile strategy focus on security, collaboration and cost effectiveness to deliver a secure mobile device capability. For command and control, this approach will enhance the service’s ability to collect and share data in real time on the battlefield.

Another focus of the Marine strategy is incorporating personal mobile devices into service networks.

Known as bring your own device, or BYOD, the approach allows users to gain limited access to an agency’s secure network and content. The personal device would run two operating systems simultaneously, giving users the best of commercial and defense enterprise services. “This is something that a lot of people in the dotmil [domain] would like to do, but we’re not there yet,” said Anderson. “However, we believe that there is already precedence in personal devices connecting to the defense infrastructure network.”

In a variation on the traditional BYOD approach, Anderson said the Marine Corps aims to allow users to “bring [their] own approved devices,” which are instead procured by the government but paid for by the user, managed by a service provider and supported by leveraging the platforms of other agencies like the Defense Information Systems Agency.

“Just for our BlackBerries today… 55 percent of [our costs] are on maintenance. It’s not on voice and data plans,” Anderson said, adding that there are only two ways to save money: eliminate users or reduce infrastructure requirements.

“Our mobility strategy is based straight up on IT investment opportunities,” he said. “In other words, cost avoidance.”

To reduce the 15,000 current users with government-procured devices, the Marine Corps will draw tighter distinctions between users whose missions are either essential or critical and those whose missions are not. Under the plan, these “privileged” users will supply their own device and data plan. Unlike their non-privileged counterparts, these users' expenses will be partially reimbursed by the Marine Corps.

In another cost cutting move, Anderson said the Marine Corps will rely on commercial providers rather than federal agencies for mobile device management (MDM). Anderson said he has talked extensively with Sprint, Verizon and AT&T to develop an implementation plan that would allow the service to outsource its security policies.

“The DOD commercial device implementation plan [talks] about rolling all the MDMs within the services up to DISA. Well, our intent is to not have a MDM at all, and have a carrier manage that -- based upon the DISA’s security policies.”

Anderson also envisions savings by simply not building platforms already in development. “We will not build a classified environment to support mobility,” he said. “We do not have the money for it.”

Instead, it will rely on DISA initiatives to fill the gap. Similarly, citing a contract DISA awarded in June to Digital Management Inc. for mobile application and device management, Anderson said the service will not create an application store for its users. “Why would we spend money on something that DISA already spends money on?”

In August, the service will distribute a survey to potential users in order to gauge interest in the BYOD program. Anderson said he hopes the incentive to gain secure access with a personal device will be sufficient for users to pay for their own plans. Next, data testing will occur with 20 users to confirm that commercial carriers can provide MDM. If successful, a pilot testing program with 500 users is expected to begin by early 2014, with a system deployed across the entire Marine Corps by next July.

The greatest challenge, Anderson concluded, is proving the business case to commercial carriers. Without carrier agreements in place to supply trusted handheld devices, the Marine Corps will be forced to shift to an alternative mobile security strategy, he warned.
